The Sad State Of SaaS

Summary

When assessing a SaaS offering for machine monitoring, weighing the options is not always easy.

Centralized hosting of business applications dates back to the 1960s, when users paid for computing time on mainframes.

In the nineties, we saw the introduction of ASPs (Application Service Providers), which maintained a separate instance of the application for each business. About a decade ago, mainstream SaaS providers appeared with multi-tenant deployments in which users “shared” the computing environment. A key driver of SaaS growth is vendors’ ability to offer prices competitive with on-premises software. This is consistent with the traditional rationale for outsourcing IT systems: applying economies of scale to application operations. Unfortunately, in retrospect, outsourced IT and SaaS have yet to fulfill their promises.

Almost everyone can attest to the frustration of outsourced IT, especially when the technical group is not even on the same continent. These faceless outsourced teams often cannot readily relate to the users’ culture.

Similarly, SaaS providers often offer only barebones support for their service. Because the application is hosted centrally, the provider decides when to update it and carries out the update. SaaS providers upgrade their software regularly, sometimes to address the needs of one user group even though the change affects all users.

Because the service is in the cloud, users who want to work offline with their data may face expensive egress charges. If the data users accumulate exceeds the provider’s typical storage allowance, additional storage costs are passed on to the user. If the scope of the application grows, for example by connecting more devices or collecting more data per device, costs can escalate beyond the initial estimate.

The application vendor has access to all customer data, and in some notable recent cases, vendors have sold metadata collected from their customer base. The application also has only a single configuration, which limits how well the software can fit the unique conditions found in almost every organization.

When SaaS is the route chosen for connecting machine assets and shop-floor workstations to collect real-time streams of critical data, issues of latency, bandwidth, and saturation all come into play. An organization needs a redundant internet connection to ensure that critical data and functionality remain available if internet service is interrupted. If operator interfaces are in use, delays of one to ten seconds can typically occur between machine-generated and operator-generated data.

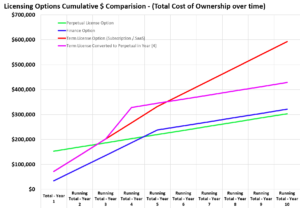

The most apparent drawback of a SaaS approach is the ongoing service cost, year over year. Whereas perpetual licensing costs are typically absorbed over a short period, subscription costs accumulate year after year and can ultimately cost an organization far more than an on-premises deployment would have.
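To make the cumulative-cost argument concrete, here is a minimal Python sketch under a deliberately simple, assumed model: a one-time perpetual license plus a fixed yearly maintenance fee, compared with a flat yearly subscription. The function names and every figure are hypothetical, chosen only for illustration; the model ignores discounting, price increases, hardware, and staffing.

```python
"""Hypothetical cumulative-cost comparison: perpetual license vs. SaaS subscription.

All figures are illustrative assumptions, not actual vendor pricing.
"""


def cumulative_on_prem(years: int, license_fee: int, annual_upkeep: int) -> int:
    # Assumed model: one-time perpetual license plus a fixed yearly maintenance fee.
    return license_fee + annual_upkeep * years


def cumulative_saas(years: int, annual_subscription: int) -> int:
    # Assumed model: a flat subscription fee paid every year the service is used.
    return annual_subscription * years


def break_even_year(license_fee: int, annual_upkeep: int,
                    annual_subscription: int, horizon: int = 20):
    """Return the first year in which cumulative SaaS spend reaches
    cumulative on-premises spend, or None if it never does within the horizon."""
    for year in range(1, horizon + 1):
        saas = cumulative_saas(year, annual_subscription)
        on_prem = cumulative_on_prem(year, license_fee, annual_upkeep)
        if saas >= on_prem:
            return year
    return None


if __name__ == "__main__":
    # Hypothetical inputs: 100,000 license, 15,000/yr upkeep, 40,000/yr subscription.
    for year in range(1, 7):
        print(year,
              cumulative_on_prem(year, 100_000, 15_000),
              cumulative_saas(year, 40_000))
    print("break-even year:", break_even_year(100_000, 15_000, 40_000))
```

With these assumed inputs, cumulative subscription spend matches the on-premises total in year 4 (160,000 each) and exceeds it every year after. If the yearly subscription were no higher than the yearly upkeep, the subscription would never catch up under this model.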

Some vendors, like Memex, can provide their on-site deployment as a capital expense (CapEx), a lease, a subscription, or a convertible subscription, so an organization can get the best of both worlds.

Another major challenge with SaaS solutions is the loss of service and data if the provider becomes insolvent and goes out of business. All the investment, procedural changes, time, and data, not to mention the cost, vanish. With an on-premises solution, at least the system stays intact and running. In manufacturing, fifty such vendors have disappeared over the last five years.

SaaS has attractive benefits and may suit ERP and other low-transaction applications. When real-time critical data is essential, however, there may be better options than SaaS. The disadvantages of SaaS, such as lack of control, are considerable and should not be ignored.